This is an animated simulation based on the paper ‘The polarization within and across individuals: the hierarchical Ising opinion model’.

(You probably can’t see it properly on a small screen.)

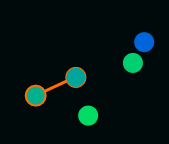

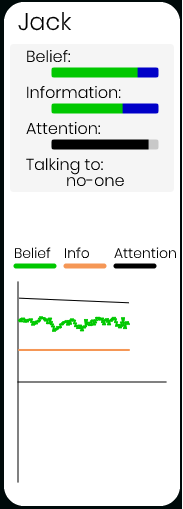

Each circle represents a person. The colour of the circle shows what that person currently believes. The more green or blue a circle, the more strongly the person aligns with the green or blue belief (respectively). The average belief of all people can be seen on the right-hand panel, as well as the recent history of the average belief.

Click on any person to see more information about them.

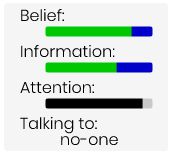

The belief bar shows how much the person supports green and blue. A completely blue bar tells you they are extremely supportive of the blue belief.

The information bar shows how strongly the information a person holds supports each position, relative to the other.

The attention bar tells you how attentive the person is to the green/blue issue. See the technical explanation below for why this matters.

‘Talking to’ gives you information that is not useful in any way.

When an orange line appears between two circles, those two people are talking about the great blue vs. green debate. In most cases, this results in an exchange of information, each piece of which supports either the green or the blue position. The exchange may or may not change what either person believes (see below).

You may notice that although the average belief remains around equal support for each position, this is not because most people are indifferent to the issue. It is because the issue is very polarizing.

If you click on any person, you will see that their belief tends towards the extremes. This is easier to see if you speed up the animation.
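To see why a balanced average does not imply indifference, here is a small Python illustration. The belief values are made up for the example, and the scale from -1 (fully blue) to +1 (fully green) is an assumption rather than something taken from the animation. Both groups have the same average belief, but the average strength of belief is very different.

```python
from statistics import mean


def summarise(beliefs):
    """Return (average belief, average strength of belief)."""
    average = mean(beliefs)
    strength = mean(abs(b) for b in beliefs)
    return average, strength


# Hypothetical populations on an assumed scale of -1 (blue) to +1 (green).
indifferent = [0.05, -0.05, 0.0, 0.02, -0.02]
polarized = [0.9, -0.9, 0.95, -0.95, 0.0]

for name, group in (("indifferent", indifferent), ("polarized", polarized)):
    average, strength = summarise(group)
    print(f"{name}: average={average:.2f}, strength={strength:.2f}")
```

The average alone cannot tell these two groups apart, which is why clicking on individual people is more revealing than watching the right-hand panel.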

The model this animation is based on seems to mirror real-life belief change in many ways. I might write more about it, but for now, see the original paper.

Technical Explanation

Here is the basic algorithm:

- People move around randomly.

- At each interval (that you can change with the speed slider), a person is selected to make conversation. The probability that a person will be selected depends on the attention they are paying to the issue (i.e., how much they care about it).

- Once a person is selected, they make conversation with their nearest neighbour.

- If the two people’s opinions do not differ hugely, they exchange information and both become more attentive to the issue.

- Everyone else’s attention to the issue decays over time.

- Before the next conversation, everyone’s beliefs are updated based on the information they have.
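The steps above can be sketched in Python. This is a minimal sketch under stated assumptions, not the code behind the animation and not the equations from the paper. The `Person` fields, the parameter names (`move`, `max_gap`, `share`, `boost`, `decay`, `floor`) and their values are all hypothetical. The information-exchange rule (each person shifts part of the way towards the other's information) and the belief update (a `tanh` of attention times information plus current belief, loosely in the spirit of a mean-field Ising update) are stand-ins chosen for illustration.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Person:
    x: float
    y: float
    information: float = 0.0  # assumed: > 0 favours green, < 0 favours blue
    attention: float = 1.0    # how much the person cares about the issue
    belief: float = 0.0       # assumed scale: -1 fully blue, +1 fully green


def make_people(n, rng):
    """Scatter n people at random positions with random initial information."""
    return [Person(rng.random(), rng.random(), information=rng.uniform(-1, 1))
            for _ in range(n)]


def step(people, rng, move=0.02, max_gap=1.5, share=0.25,
         boost=0.5, decay=0.98, floor=0.01):
    """Run one conversation interval; all parameter values are assumptions."""
    # People move around randomly.
    for p in people:
        p.x += rng.uniform(-move, move)
        p.y += rng.uniform(-move, move)

    # A speaker is selected with probability proportional to attention.
    weights = [p.attention for p in people]
    speaker = rng.choices(people, weights=weights, k=1)[0]

    # The speaker talks to their nearest neighbour.
    listener = min(
        (p for p in people if p is not speaker),
        key=lambda p: math.hypot(p.x - speaker.x, p.y - speaker.y),
    )

    # They only exchange information if their opinions are not too far apart.
    talked = abs(speaker.belief - listener.belief) <= max_gap
    if talked:
        s, l = speaker.information, listener.information
        speaker.information += share * (l - s)   # assumed exchange rule
        listener.information += share * (s - l)

    # Conversing people become more attentive; everyone else's attention decays.
    for p in people:
        if talked and (p is speaker or p is listener):
            p.attention += boost
        else:
            p.attention = max(p.attention * decay, floor)

    # Everyone's belief is updated from the information they have.
    for p in people:
        p.belief = math.tanh(p.attention * (p.information + p.belief))

    return speaker, listener, talked


if __name__ == "__main__":
    rng = random.Random(0)
    people = make_people(30, rng)
    for _ in range(500):
        step(people, rng)
    print("average belief:", sum(p.belief for p in people) / len(people))
```

Feeding the current belief back into the update is what lets highly attentive people settle near one extreme in this sketch, while inattentive people stay closer to the middle. The floor on attention is only there so that the weighted selection always has positive weights to work with.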

For a fuller explanation, see the original paper. My version makes a few departures from the equations in the paper, but I think it broadly represents the same phenomena.

Reference

Han L J van der Maas, Jonas Dalege, Lourens Waldorp, The polarization within and across individuals: the hierarchical Ising opinion model, Journal of Complex Networks, Volume 8, Issue 2, April 2020, cnaa010, https://doi.org/10.1093/comnet/cnaa010